Introduction

When csi-driver-nfs provides a Persistent Volume (PV) in Kubernetes, the capacity specified in the Persistent Volume Claim (PVC) is not enforced, and the pod sees the capacity of the entire NFS filesystem instead.

Similar behavior has been reported in the following GitHub issues:

- https://github.com/kubernetes-csi/csi-driver-nfs/issues/427

- https://github.com/kubernetes-csi/csi-driver-nfs/issues/517

- https://github.com/kubernetes/kubernetes/issues/124159

1. The Problem

Let’s see what the actual problem is.

1. Specifying a Capacity of 2Gi

kubectl describe pvc datadir-mysql-cluster-0 -n dev

Name: datadir-mysql-cluster-0

Namespace: dev

StorageClass: nfs-csi

Status: Bound

Volume: pvc-0d5ef0cf-be39-4fe4-8c7d-ba2fcb53229d

### Omitted ###

Capacity: 2Gi

Access Modes: RWO

VolumeMode: Filesystem

Used By: mysql-cluster-0

2. Capacity is not 2Gi

The entire capacity of the NFS filesystem is exposed to the pod, and more than 2Gi can be written without any error.

kubectl exec -it mysql-cluster-0 -n dev -c mysql -- /bin/bash

bash-4.4$ df -h

Filesystem Size Used Avail Use% Mounted on

### Omitted ###

192.168.1.204:/mnt/tank/lun1/pvc-0d5ef0cf-be39-4fe4-8c7d-ba2fcb53229d 872G 17G 856G 2% /var/lib/mysql
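
To make the size of the gap concrete, the requested Capacity can be compared with the size that df reports inside the pod. The following is only a minimal sketch: the helper names (parse_size, visible_to_requested_ratio) are made up for illustration, and it handles just the common unit suffixes, not the full Kubernetes quantity grammar.

```python
import re

# Multipliers for a subset of Kubernetes quantity suffixes (e.g. "2Gi", "500M").
K8S_UNITS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}

# df -h prints sizes in powers of 1024 with a single-letter suffix.
DF_UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def parse_size(text: str, units: dict) -> int:
    """Convert a string such as "2Gi" or "872G" to bytes using the given suffix table."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)([A-Za-z]*)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    number, suffix = match.groups()
    if suffix and suffix not in units:
        raise ValueError(f"unsupported suffix in {text!r}")
    # An empty suffix means the number is already in bytes.
    return int(float(number) * units.get(suffix, 1))


def visible_to_requested_ratio(pvc_capacity: str, df_line: str) -> float:
    """How many times larger the size shown by df is than the PVC request.

    df_line is one data row of `df -h` output:
    filesystem, size, used, avail, use%, mount point.
    """
    visible = parse_size(df_line.split()[1], DF_UNITS)
    requested = parse_size(pvc_capacity, K8S_UNITS)
    return visible / requested
```

Feeding it the Capacity from the PVC above ("2Gi") and the df row for /var/lib/mysql gives a ratio of 436, that is, the pod sees 436 times the capacity it asked for.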

2. The Cause

This is due to the following characteristics of NFS:

- The volume behind a PVC is just a folder within the NFS share mounted on the node/pod, and even when only a specific directory of the share is mounted, df reports the size and usage of the entire filesystem.

- NFS itself does not have a quota feature to limit the capacity of specific folders. Therefore, the Capacity limit cannot be enforced.

PVC is a folder within NFS

As shown below, a specific folder of the NFS share is mounted in the pod (in this case, at /var/lib/mysql), and its reported size is 872G.

bash-4.4$ df -h

Filesystem Size Used Avail Use% Mounted on

192.168.1.204:/mnt/tank/lun1/pvc-0d5ef0cf-be39-4fe4-8c7d-ba2fcb53229d 872G 17G 856G 2% /var/lib/mysql

Indeed, the NFS server in use (TrueNAS) has an 872G volume mounted at /mnt/tank/lun1.

root@truenas[~]# df -h

Filesystem Size Used Avail Capacity Mounted on

tank/lun0 865G 8.7G 856G 1% /mnt/tank/lun0

tank/lun1 872G 16G 856G 2% /mnt/tank/lun1

tank/lun2 936G 80G 856G 9% /mnt/tank/lun2

Furthermore, listing the contents of /mnt/tank/lun1 makes it clear that each PV is created as a folder there.

root@truenas[/mnt/tank/lun1]# ls -al | grep pvc | head -n 10

drwxrwsr-x 5 root 1000 7 Aug 24 03:13 pvc-02746503-d9d9-425c-a833-d626dd8b7c6d

drwx--S--- 8 27 27 38 Apr 18 23:39 pvc-05c6524f-3b5b-4fb8-9854-49ea0c47a9c1

drwxrwsr-x 5 root 1000 7 Aug 24 06:36 pvc-05f7474d-2394-4af5-93a2-0926b9c6f42a

drwx--S--- 8 27 27 35 Apr 13 19:25 pvc-0730e5a5-bd13-4c6a-970b-b2c00e4180e8

drwx--S--- 8 27 27 35 Apr 21 00:32 pvc-07a95b07-11e4-42f9-bb48-27e115f54411

drwxr-xr-x 2 root wheel 2 Jun 22 09:02 pvc-07aad2bb-c1c3-40e4-8fef-e82659272d41

Therefore, since this folder is mounted as a PV, the Capacity limit is ineffective, and the entire capacity is visible.
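
The same effect can be reproduced on any Unix-like machine without NFS: the total size comes from the filesystem that contains a path, so a plain subfolder always reports the same total as its parent. The sketch below uses Python's os.statvfs (Unix only); the function names are illustrative, and it assumes the folder is an ordinary directory rather than a separate filesystem or dataset.

```python
import os


def filesystem_size_bytes(path: str) -> int:
    """Total size of the filesystem containing `path`, the figure df shows as Size."""
    stats = os.statvfs(path)
    # f_blocks is counted in units of f_frsize bytes.
    return stats.f_frsize * stats.f_blocks


def reports_same_size(parent: str, child: str) -> bool:
    """True when a folder and its subfolder report an identical total size."""
    return filesystem_size_bytes(parent) == filesystem_size_bytes(child)
```

Calling reports_same_size on a directory and a freshly created subdirectory returns True, which mirrors how every pvc-* folder under /mnt/tank/lun1 shows the full 872G.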

NFS itself does not have a quota feature

If a folder is mounted, it would be ideal to implement a capacity limit on the folder, but unfortunately, the NFS protocol itself does not have a quota (capacity limit) feature.

Therefore, folder-specific capacity limits cannot be enforced, making the Capacity limit ineffective and exposing the entire capacity.

Although NFS itself does not have a capacity limit feature, server-side quota mechanisms do exist, such as filesystem quotas on the NFS server used together with rquotad (see the official Red Hat documentation).

Using this, capacity limits can be implemented, but since it only runs on the NFS server, the client side (pod) cannot verify that this limit is in place.
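
Because the limit cannot be seen from the pod, one client-side workaround is to measure how much data the mounted folder actually holds and compare that with the requested Capacity. The sketch below is an illustration only, not something csi-driver-nfs does: the function names are made up, it sums apparent file sizes (not allocated blocks), and it counts hard-linked files once per link.

```python
import os


def directory_usage_bytes(root: str) -> int:
    """Sum the apparent sizes of all files under `root`."""
    total = 0
    # os.walk does not descend into symlinked directories by default.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            try:
                # lstat does not follow symlinks, so a link adds only its own size.
                total += os.lstat(full_path).st_size
            except OSError:
                # The file vanished or is unreadable; skip it.
                continue
    return total


def exceeds_request(root: str, requested_bytes: int) -> bool:
    """True when the data under `root` is larger than the requested capacity."""
    return directory_usage_bytes(root) > requested_bytes
```

For the volume in this article, root would be /var/lib/mysql and requested_bytes would be 2 * 2**30. Walking a large tree over NFS is slow, so a check like this suits occasional audits rather than frequent polling.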

Conclusion

Be aware that the capacity allocated to the pod and the capacity it actually sees may differ, which can cause capacity monitoring to report misleading values.

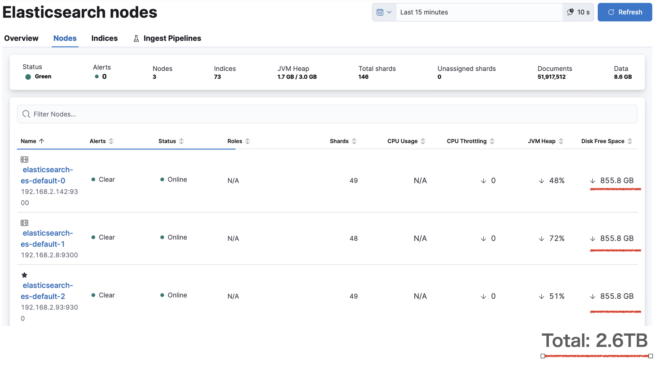

In my environment, I was using NFS as the PV for Elasticsearch. However, the reported capacity differs from the actual capacity: since the volumes are all allocated from the same NFS filesystem and each one reports that filesystem's full size, the total appears to be 2.6TB, which is three times the actual capacity.
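
The over-count can be illustrated with a little arithmetic. The sketch below is hypothetical: it assumes three volumes that all live directly under the lun1 share (the pvc-a, pvc-b, and pvc-c names are invented) and treats the parent directory of each PV folder as the shared filesystem. Under those assumptions, 3 x 872G = 2616G, which is roughly the 2.6TB shown, while counting each share once gives 872G.

```python
import posixpath


def naive_total(mounts):
    """Add up the size reported by every mount, as a per-volume dashboard would."""
    return sum(size for _source, size in mounts)


def deduplicated_total(mounts):
    """Count each NFS share once, however many PV folders are mounted from it.

    `mounts` holds (source, size) pairs, where source looks like
    "server:/export/path/pvc-...". Assumption: the share is the parent
    directory of the PV folder.
    """
    size_per_share = {}
    for source, size in mounts:
        server, _, path = source.partition(":")
        share = (server, posixpath.dirname(path))
        # Every folder on the same share reports the same total size.
        size_per_share[share] = size
    return sum(size_per_share.values())


# Hypothetical mounts, sizes in GiB as printed by df -h.
example_mounts = [
    ("192.168.1.204:/mnt/tank/lun1/pvc-a", 872),
    ("192.168.1.204:/mnt/tank/lun1/pvc-b", 872),
    ("192.168.1.204:/mnt/tank/lun1/pvc-c", 872),
]
```

With example_mounts, naive_total returns 2616 and deduplicated_total returns 872. A monitoring setup that groups volumes by their backing share before summing would avoid the inflated figure.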